Big Codebase Vibes: Taming the 14M LOC Monorepo

In modern software engineering, we often know what needs to change, but in a massive polyglot codebase, the "where" and "how" are the real killers. At our scale—navigating over 14 million lines of code across JavaScript, Python, Java, and Go—no single engineer has the full map in their head.

When a Customer Escalated Bug hits your desk, the first question isn't "How do I code this?" but "Is this a frontend issue, a backend bug, or an infra mismatch?" Standard LLM chat windows fail here. They lack the "sensory organs" to navigate deep complexity. To solve this, we’ve moved beyond simple chat interfaces to a tiered AI assistance strategy using Claude Code, Model Context Protocol (MCP), and a Hierarchical Documentation strategy.

The Stack: Bridging the Context Gap

To navigate a codebase this dense, we need tools that act as agents, not just text generators. We use the Model Context Protocol (MCP) to give Claude direct access to our environment.[2]

| Tool | The Problem | The "Superpower" |

|---|---|---|

| Serena (MCP) | Context Window Limits | Semantic Navigation. Instead of reading every file, it indexes symbols to "jump to definition" across the repo without burning tokens. |

| Agent-Browser | Data Silos | External Sight. Allows the AI to view web UIs, Grafana dashboards, and live documentation not checked into git. |

| CLAUDE.md Hierarchy | Tribal Knowledge | Bottom-Up Memory. Recursive documentation capturing architecture and conventions at the folder level. |

| Skills | Workflow Friction | Top-Down Behavior. Specialized instructions for repetitive tasks (e.g., "Deploy to Stage"). |

The Methodology: Top-Down vs. Bottom-Up

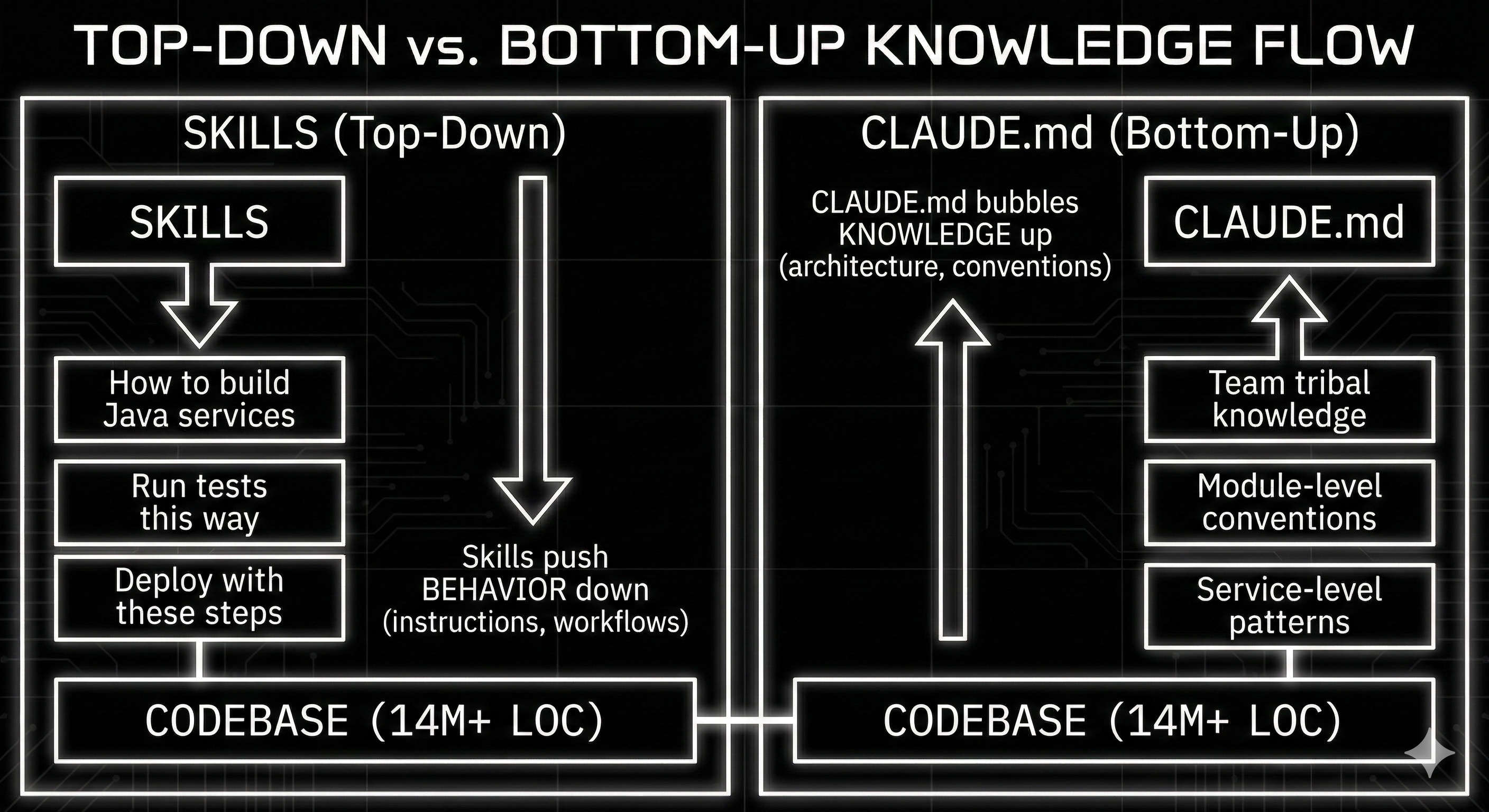

The core of our approach is balancing Behavior (what the AI does) with Knowledge (what the AI knows). This mirrors the emerging industry standard of separating "Skills" from "Context."

1. Top-Down: Skills as Mental Frameworks

Skills are logical groupings of capabilities. Instead of manually explaining how to build a Java service every

session, we define a java-service skill.

When Claude enters a terraform-infra context, it automatically loads the "Infra Skill," which

forbids manual console changes and forces

plan/apply workflows.

2. Bottom-Up: The CLAUDE.md Hierarchy

Because 14M lines of code is too much for one index, we use a recursive documentation strategy known as Progressive Disclosure.

- Root CLAUDE.md: High-level architecture and links to domains.

- Domain CLAUDE.md: Specific patterns for infra or services.

- Service CLAUDE.md: The "ground truth"—local build commands and gotchas.

This prevents "Context Pollution." When working on the frontend, Claude doesn't need to know the Terraform state locking mechanism. It only loads what is relevant to the current directory.

The "Fix" in Action

We recently faced a complex bug involving a specific service failing silently in production. The old way involved 4 hours of grepping logs and checking three different repos. The Agentic way was different:

- Agent-Browser checked the live Grafana dashboard to pinpoint the error timestamp.

- Serena traced the error ID across the

java-serviceandpython-mlrepositories, identifying a schema mismatch. - Claude Code proposed a PR that updated the schema and patched the Terraform config to redeploy consumers.

Future Work: The Agentic Horizon

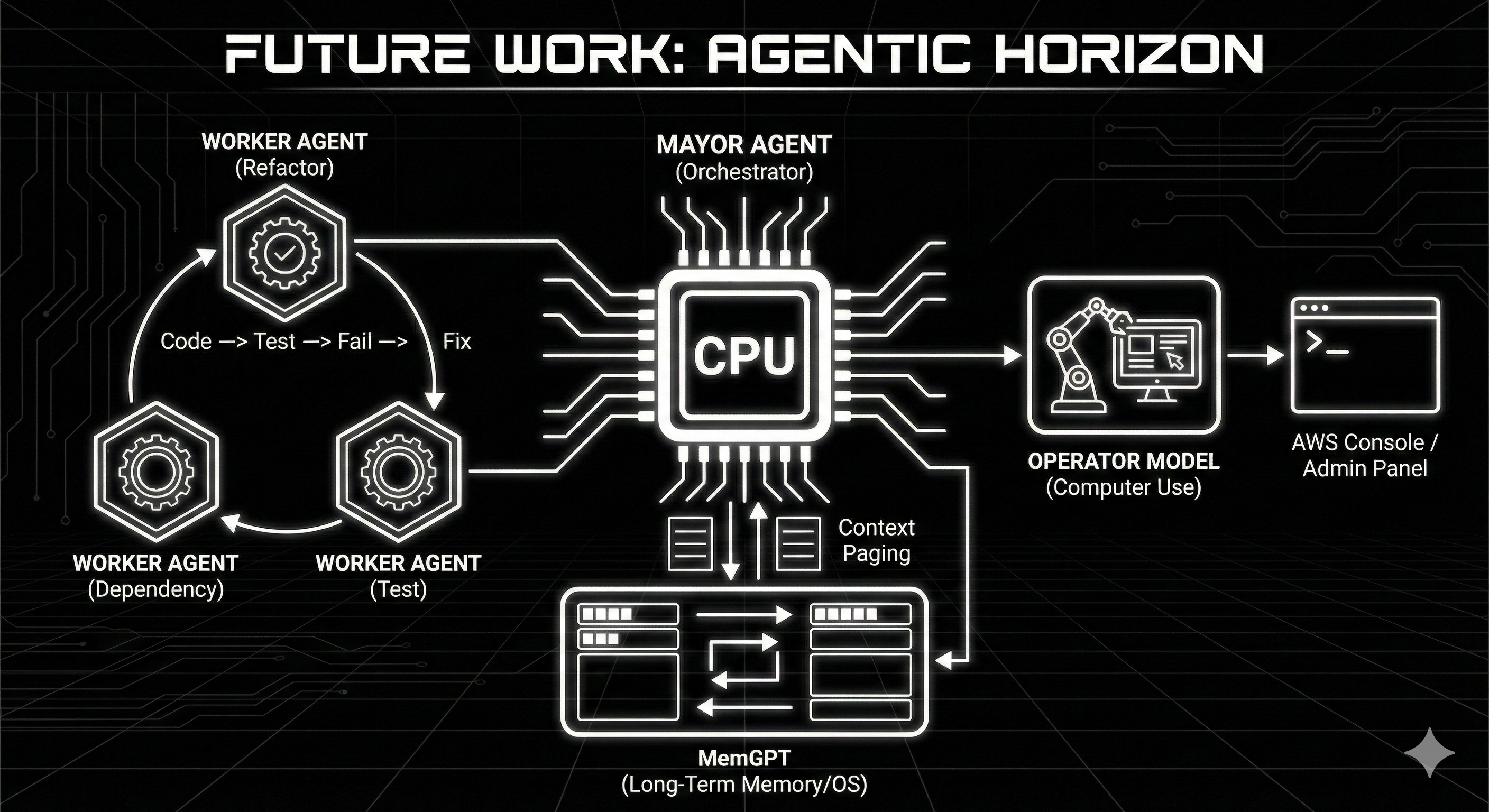

While our current stack has 10x'd our ability to navigate the codebase, we are now looking at how to industrialize the actual coding process. We are moving from "Assistant" models to "Orchestration" models.

1. From "Chat" to "Gastown" (Multi-Agent Orchestration)

The next bottleneck isn't generating code; it's managing the agents generating the code. We are exploring frameworks similar to Steve Yegge’s "Gastown", which treats the development environment like a factory rather than a chat window.[1]

Instead of one developer chatting with one Claude, we use a "Mayor" agent to coordinate multiple "Worker" agents. You tell the Mayor, "Refactor the auth module," and it spins up three specialized agents: one to map dependencies, one to write the interface, and one to run the test suite in a loop.

2. Infinite Context with MemGPT (Letta)

Currently, every session starts fresh. We are experimenting with MemGPT (now Letta) to act like an OS for our agents, paging memories in and out of the context window. If Claude learns a specific quirk about our Terraform setup, it writes it to long-term storage, allowing a different session two months later to recall that fact.